If our definitions of artificial intelligence differ, it's because you are wrong.

Don't worry, it's not your fault. Or maybe I am hanging on to an ancient definition and history will prove you right in the same way that Donald Trump did not go to jail in 2024. I'm still right, but nobody will care anymore.

Let me explain.

One of the earliest thought experiments of AI was the Turing test. In short, it proposed that we can talk about "artificial intelligence" when a machine can hold a conversation with a human, and the human can't tell it was talking to a machine.

Contrary to popular opinion, nothing we have made has yet passed a Turing test. No, really. You don't even need a Blade Runner cop to make a machine fail one.

Artificial Intelligence is a broad field of study that encompasses mathematics, computing, linguistics, psychology, and nerditude of terrifying, dizzying depth.

These days, you may be excused for thinking that large language models are artificial intelligence. That's where the whole you being wrong about AI thing comes up, big time.

Large language models are produced with AI. They are used by AI programs to generate the conversations we inflict on ourselves because we haven't discovered quite where hell is exactly just yet, but they still only represent a subset of a single part of a single discipline of AI. Large language models are a form of neural network.

A powerful, monstrously powerful kind of neural network, with spiky hair and studded collars on faded denim jackets. Sure, fierce and badass in the way they're waving that smashed beer bottle in your face... but still just neural networks. Just one small part of the great dream that is Artificial Intelligence.

On second thought, though, maybe you are right. Maybe large language models are not the AI we wanted, but the AI we deserve.

You see, with a gun to my head I could list maybe a dozen people I know who'd pass the Turing test. To put that into perspective, between 2019 and 2023, I interviewed some 700 applicants for various positions at our company. I talk to a lot of people.

So, if most people can't, on their best day, transcend the limitations of their context, why be so hard on artificial intelligence for not passing a test a dead mathematician dreamed up 70 years ago?

Why does it enrage me so that people proudly state they understand how generative platforms work? After all, technically, they are right. Generation is the technical process of making something based on a set of specifications.

It's because that's not what those words mean to you. It's not what Artificial Intelligence was supposed to deliver. Come to it, intelligence is not really living up to its promise much either.

So, I retreat into pedantry, and cuddle my words, and we wait for the safety of dawn.

The dawn of a day when we don't mislabel generative to mean creative, for example. See? I told you that you were wrong. My self-doubt was entirely staged for maximum theatrical effect.

So let's shape the terms of a new, mature way of discussing artificial intelligence and its merits, shortcomings, and potential. Let's start by executing all management and sales people; they deform such topics with their greed and sheer idiocy.

I kid, you can keep a couple of managers.

Artificial Intelligence is already an embedded, daily companion. It has been for decades. How do you think Google managed to sort through billions of records to find your celebrity foot pictures? AI doesn't need to be bigged up, and it doesn't need to be treated like a sport, with bated breath and thumping heartbeats at every announcement.

We all need to chill and make sure we understand this thing that has been reshaping our lives for decades, and that may end up making them much worse if we don't all understand what we're talking about.

There is a very mature, incredible conversation to be had about AI, even in its current state, large language models and all.

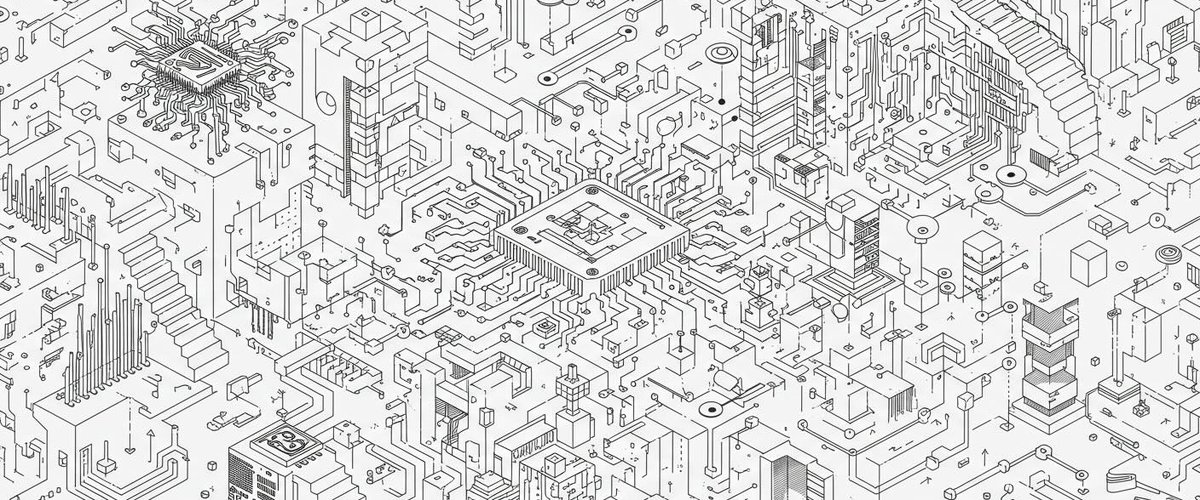

Generative intelligence is an incredible work partner. It created the image that comes with this post. It's helping me code several projects. However, generative intelligence is nowhere near general intelligence, and neither will ever approach human reasoning. Your machines are not people, and they never will be.

Why would you want them to be? Let's start having that conversation from there. Why parrot human intelligence? What precisely is the purpose of creating machines that are just like us, and who waste billions of calculations a second being just like us?

What is the point in creating more human brains? We have enough, and they can't even hold a decent conversation.

It would be interesting to see what the results would be if we dropped this arrogant quest for a digital mirror of what we consider intelligence. It would be spectacular if we unshackled our research from the constraints of pretending to be human. Let's try for better, beautifully alien intelligence. I bet it's going to teach us a thing or two.